Archive for March, 2009

The first couple of implementations of Mark 22 of the NAG (Numerical Algorithms Group) Library have been released today. Although written in Fortran, this set of highly regarded numerical routines can be called from many languages including Java, Python and Visual Basic. Products also exist to allow you to call the NAG routines from MATLAB and Maple but these haven’t been updated yet.

NAG divide the functionality of their library into a series of chapters such as local optimisation, random number generators and smoothing in statistics. This latest version of the library adds three completely new chapters along with additions to many of the existing ones. The new chapters are

- Wavelet Transforms

- Global Optimisation (That’s right – GLOBAL optimisation! No longer are you restricted to local optimisation problems)

- Further linear algebra support routines

Of the 192 new functions that NAG have added to this release some of the ones that caught my eye include

- Evaluation of Lambert’s W function for real values.

- A new routine for computing the matrix exponential of a real-valued matrix.

- A routine to compute the nearest correlation matrix to a real square matrix.

- A suite of routines for evaluating various option pricing formulae.

- A new routine for performing ProMax rotations.

- Improved quasi and pseudo random number generators.

A full description of all of the new stuff can be found on NAG’s website. So far you can only get 32bit and 64bit Linux versions of Mark 22 but I expect other versions to be available soon.

I make no attempt to hide the fact that I am a big fan of NAG and their products and this latest release adds a lot of great new functionality. Enjoy!

Over at The Endevour, John Cook considers the martial arts movie Redbelt which differentiates between martial arts competitions and real fights. Competitions tend to have artifically imposed restrictions (aka ‘the rules’) whereas real fights don’t. In his post, John likens this to the difference between real world maths problems and academic maths problems.

Taking the martial arts analogy one step further – I was reminded of a quote from Bruce Lee while reading John’s post.

‘Before I studied the art, a punch to me was just like a punch, a kick just like a kick. After I learned the art, a punch was no longer a punch, a kick no longer a kick. Now that I’ve understood the art, a punch is just like a punch, a kick just like a kick.’

I used to practise Taekwondo back in the day and I can relate to what Bruce was saying. When you start Taekwondo you expect to be taught how to kick, and you are, you are taught how to do a ‘side kick’ and a ‘front snap kick’ and a ‘turning kick’ and an ‘axe kick’ and a…..well you get the idea. A kick for every occasion.

All these kicks to learn with each one having its own set of detailed technical nuances. I spent years developing the perfect ‘spinning reverse turning kick’ for example and got seriously good at it – everyone was impressed with my kick. It was a beautiful, powerful, amazing kick and I was proud of it.

However, I rarely managed to hit anyone with it in a competition scenario. Of course if I ever did score a point with it then the crowd would go crazy – it looked great and they loved it. It just didn’t happen very often. Futhermore, in a real fight I would be a moron to even think about using it. It was too inefficent, too slow and put me at too much risk. It involved briefly having my back to my opponent while doing the spin for pity’s sake.

Over the years of learning these fancy techniques I somehow missed the point…which was to learn how to plant your foot on your opponent as fast and powerfully as possible with the minimum of fuss. All the tehncial paraphernalia was just a means to an end but I got caught up trying to develop the ‘perfect kick’ for its own sake. The application didn’t concern me.

I never got very good at winning fights but I had a whole load of fun and that’s what I really did Taekwondo for.

Back to mathematics….

When solving a mathematical problem how do you go about it? Do you insist on always using certain favourite techniques to get the job done? Will you only accept and publish the answer if you reached it by some clever, beautiful and impressive means? In short are you perfecting the perfect spinning reverse turning kick which will only work occasionally but when it does everyone oohs and aaahs at your technical prowess.

On the other hand will you use any dirty, sloppy and downright underhanded method in your arsenal to solve the thing? Who cares how you get the answer as long as you get it? Street Fighting Mathematics maybe?

Personally, with both Mathematics and Martial arts, I don’t think that there is a ‘right way to do it’ just as long as you are aware of what you are doing.

Near the end of last week I was acting as teaching assistant for a MATLAB course and some of the students wanted to know if there were any viable free, open source alternatives to MATLAB. I duly listed what I considered to be the standard set – Scilab, Octave, SAGE and Python – which are more or less MATLABy depending on your point of view.

One student asked ‘What about R?’ and I had to confess that I didn’t know much about it except that it is used a lot by statisticians and is essentially a free, open source version of S+. I ventured the opinion that maybe R was too specialised to be considered a general MATLAB alternative with the caveat that I didn’t actually know what I was talking about.

It seems that there is nothing a student likes more than to teach the teacher and so much googling ensued. I was pointed to a small set of speed comparisons between MATLAB and R for example which includes some matrix operations like finding eigenvalues and generating Toeplitz matrices. You don’t get much more MATLABy than matrices! Other articles such as this comparison between various data analysis packages also proved interesting and useful.

So, thanks to a line of questioning from some MATLAB students, I have yet another thing on my to-do list – find out more about R. What do you think? Can R replace MATLAB sometimes?

Some time ago now I wrote about an integral that Mathcad 14 had trouble with and also asked if anyone could solve it by hand.

![]()

Well, James Graham-Eagle of the University of Massachusetts has risen to the challenge and provided me with the full solution as a pdf file which I offer to you all for your downloading pleasure. Thanks James!

Say you want a vector that starts at 0 and goes to 1 in steps of 0.1

There are two ways you might do this in MATLAB

x=0:0.1:1

y=linspace(0,1,11)

If you display either x or y then you will get

[0 0.1000 0.2000 0.3000 0.4000 0.5000 0.6000 0.7000 0.8000 0.9000 1.0000]

So it looks like we are in clover. However if we do

x==y

we get

[1 1 1 0 1 1 1 1 1 1 1]

Which shows that the vectors x and y are not EXACTLY equal for all values (1 stands for true and 0 stands for false so one value is different). We can see that the difference is extremely small by doing

x-y

1.0e-16 *

0 0 0 0.5551 0 0 0 0 0 0 0

This tiny difference is almost certainly caused by the fact that the colon operator and linspace use slightly different methods to calculate the vectors as pointed out in the newsgroup post where I discovered this little piece of trivia. . If floating point arithmetic was exact then it wouldn’t matter what algorithm you used to calculate a vector like this – the result would always be the same as you would expect.

However, floating point arithmetic isn’t exact and so things like the order of operations matters. This sort of thing has been discussed many times by people considerably more erudite than me so I refer you to them for details.

What I was curious about was ‘How,exactly, does the colon operator calculate it’s values and how does this differ from linspace?’ Oh yes, I am a sucker for mathematical trivia.

If I didn’t have access to either of these methods in MATLAB then I would calculate our vector like this

n=0:10

a=n*0.1

But it turns out that this isn’t equal to either x or y so this isn’t how linspace or the colon operator works:

a==x (compare to colon operator)

1 1 1 1 1 1 0 0 1 1 1

a==y (compare to linspace)

1 1 1 0 1 1 0 0 1 1 1

Another way for calculating this vector that springs to mind is to start with 0 and repeatedly add 0.1 until you reach 1. I normally would never do it like this due to the possibility of accumulating rounding errors on each addition but let’s take a look.

b=[zeros(1,11)];

for i=2:11

b(i)=b(i-1)+0.1;

end

As I’d expect, this isn’t how MATLAB does it either.

b==x (compare to colon operator)

1 1 1 1 1 1 1 1 0 0 0

b==y (compare to linspace)

1 1 1 0 1 1 1 1 0 0 0

My methods don’t agree with each other either (do a==b to see) but I was expecting that.

So, I am non the wiser. How does linspace and the colon operator work? Thoughts and comments would be welcomed.

The second edition of the new blog carnival ‘Math teachers at play’ has been posted over at ‘Let’s play math’. Although I am not a maths teacher, I do like to play with math and there is certainly a lot to play with in this carnival. Why not head over there to see what is on offer?

If you are the owner of a maths based blog and would like one or more of your posts included in the next edition of this carnival – (which will be over at f(t) on March 20th) – then simply submit something via the submission form. Carnivals such as these are a great way to get involved with the wider blogging community and are also a good source of inspiration.

Enjoy!

The math software releases are coming thick and fast at the moment. This latest release of Mathworks’ flagship program comes with a huge number of improvements but possibly the most important in my mind right now is the following.

- Utilization of eight cores on your desktop with Parallel Computing Toolbox (PCT)

When you use the Parallel Computing Toolbox with MATLAB 2008b or below, you can only make use of up to 4 cores simultaneously. This was starting to become a problem for users at my workplace because dual quad core machines are becoming increasingly common around there.

I installed the PCT (at a cost of 192 pounds +VAT for the network licensed version) for the owner of such a machine a few months back and we immediately hit upon the 4 core limit of MATLAB 2008a. He had 8 cores and wanted to use them so I fired off an email to Mathworks support for advice. I figured that we would have to pay another 192 pounds for another 4 core PCT but I was wrong. So, very very wrong.

Cutting a long story short we were told that if we wanted to use 8 cores then we would have to buy 8 licenses for their dsitributed computing server product at a total cost of 1400 pounds + VAT. Ouch!

So…to summarize – the pricing structure we were being offered for MATLAB 2008a was

- Parallelisation over 4 cores cost 192 +VAT or 48 pounds per core

- Parellisation over 8 cores cost 1400 + VAT or 175 pounds per core

I was rather stunned by this outcome and said as much to my long-suffering Mathworks account manager (who has the patience of a saint I think – I’d like to take this opportunity to publicly thank her for putting up with me).

Anyway, this has been fixed in 2009a and the PCT can now use up to 8 cores and so this particular toolbox is now twice as powerful as it was before.

Great news all round I think.

If you enjoyed this article, feel free to click here to subscribe to my RSS Feed.

I needed to profile some MATLAB code recently to try and optimise it. It’s been a while since I have done this sort of thing so I first reminded myself of the details by reading the documentation. Eventually I came to a sage piece of advice concerning multi-core processors…

Note If your system uses Intel multi-core chips, you may want to restrict the active number of CPUs to 1 for the most accurate and efficient profiling. See Intel Multi-Core Processors — Setting for Most Accurate Profiling for details on how to do this.

‘Sounds fair enough‘ I thought so I clicked on the link and started reading the instructions. The first couple of steps were

1. Open Windows Task Manager.

2. On the Processes tab, right-click MATLAB.exe and then click Set Affinity.

Outrage! How dare they assume I am using Windows ;) A bit of searching through the documentation revealed that, sure enough, The Mathworks had forgotten about us Linux users in this particular instance. Mac users are left in the cold too for that matter.

So, I fired off a quick email to Mathworks support and asked them how I should proceed with setting the processor affinity as a Linux user. I got a reply within just a couple of hours. Here it is in full (edited very slightly by me) for anyone else who needs to do this:

The Linux taskset command (part of a scheduler utilities package) can be used to set the processor affinity for a certain Process ID (PID). To find MATLAB’s PID, you can execute

ps -C MATLAB

from a Linux terminal. Then, to set the processor affinity for MATLAB to one CPU (e.g. CPU #0), execute (also from a Linux terminal):

taskset -pc 0 PIDhere

The ‘-p’ option specifies that taskset should operate on an existing PID instead of creating a new task. The ‘-c’ option allows you to specify a list of processor numbers instead of a bitmask representing the processors you would like to use. For more information on the syntax of taskset, it is best to look at the manpage for this command (i.e. by executing man taskset from a Linux terminal).

The support analyst (who wishes to remain anonymous) who was helping me out then informed me that he had raised a request for enhancement with the Mathworks documentation team to try and get this information covered in future versions of the MATLAB docs.

I’m impressed with this level of service so thank you to everyone involved at the Mathworks for solving this little issue of mine.

One of my informants told me to expect something big from Wolfram Research in the near future but didn’t tell me what it was. No matter how hard I tried, she wouldn’t give me any details apart from ‘Stephen is very excited about it‘ and ‘A surprise announcement will be coming soon.‘ She sure knows how to get me intrigued.

Well, now the secret is out…sort of. Stephen Wolfram has just made a blog post about a project he has been working on for a few years called Wolfram Alpha.

I’ll be honest with you – I’ve read the blog post and I’m still not sure what this is all about but with phrases like:

…Fifty years ago, when computers were young, people assumed that they’d quickly be able to handle all these kinds of things. And that one would be able to ask a computer any factual question, and have it compute the answer.

and

It’s going to be a website: www.wolframalpha.com. With one simple input field that gives access to a huge system, with trillions of pieces of curated data and millions of lines of algorithms.

I am even more intrigued. Nothing is live yet – this is just an appetizer announcement but I am really looking forward to finding out more about what this is all about.

Any thoughts?

Update (18th March 2009): Doug Lenat has also seen a demo of the system and has discussed it in depth at Semantic Universe including the sort of questions that Wolfram Alpha won’t be able to answer.

John Hawks wonders if Wolfram Alpha will make bioinformatics obsolete? I don’t know enough about bioinformatics to comment but it’s an interesting article.

Update (9th March 2009): Someone with better connections than me has seen it in action. Check out this report for more details.

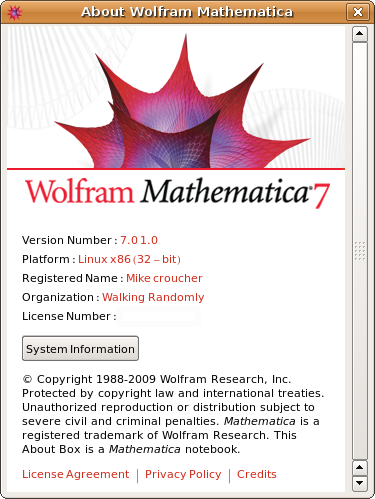

Look what I’ve got :)

News on what’s new in Mathematica 7.0.1 is sparse at the moment but I’m working on it. The following is lifted from Wolfram’s site:

- Performance enhancements to core image-processing functions

- Right-click menu for quick image manipulation

- New tutorials, “How to” guides, and screencasts

- Thousands of new examples in the documentation

- Improved documentation search

- Integration with mathematical handwriting-recognition features of Windows 7

- Integration with the upcoming release of gridMathematica Server

Expect a follow up post soon.