Archive for May, 2011

MATLAB’s lsqcurvefit function is a very useful piece of code that will help you solve non-linear least squares curve fitting problems, and it is used a lot by researchers at my workplace, The University of Manchester. As far as we are concerned, it has two problems:

- lsqcurvefit is part of the Optimisation Toolbox and, since we only have a limited number of licenses for that toolbox, the function is sometimes inaccessible. When we run out of licenses on campus, users get the following error message:

??? License checkout failed. License Manager Error -4 Maximum number of users for Optimization_Toolbox reached. Try again later. To see a list of current users use the lmstat utility or contact your License Administrator.

- lsqcurvefit is written as an .m file and so isn’t as fast as it could be.

One solution to these problems is to switch to the NAG Toolbox for MATLAB. Since we have a full site license for this product, we never run out of licenses and, since it is written in compiled Fortran, it is sometimes a lot faster than MATLAB itself. However, the current version of the NAG Toolbox (Mark 22 at the time of writing) isn’t without its issues either:

- There is no direct equivalent to lsqcurvefit in the NAG Toolbox. You have to use NAG’s equivalent of lsqnonlin instead (which NAG call e04fy).

- The current version of the NAG Toolbox, Mark 22, doesn’t support function handles.

- The NAG Toolbox requires integer arguments to be passed as int32 or int64, depending on the architecture of your machine. The practical upshot of this is that your MATLAB code is suddenly a lot less portable. If you develop on a 64-bit machine then it will need modifying to run on a 32-bit machine and vice versa.
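One way to contain the int32/int64 problem is to route every integer argument through a single helper, so that only one function knows about the architecture. The sketch below uses a hypothetical helper, toNagInt (not part of the NAG Toolbox), and assumes that the 64-bit toolbox expects int64 while the 32-bit toolbox expects int32. It tells the two apart using the second output of MATLAB’s computer function, which is the maximum number of elements an array may hold.

```matlab
function n = toNagInt(value)
% TONAGINT  Hypothetical helper: cast an integer argument to the integer
% class assumed to be expected by the NAG Toolbox on this platform.
%   Assumption: int64 on 64-bit MATLAB, int32 on 32-bit MATLAB.
[~, maxArraySize] = computer;   % 2^31-1 on 32-bit MATLAB, larger on 64-bit
if maxArraySize > double(intmax('int32'))
    n = int64(value);           % 64-bit MATLAB
else
    n = int32(value);           % 32-bit MATLAB
end
end
```

If every integer passed to a NAG routine goes through toNagInt, the same .m file should run unchanged on either architecture, provided the assumption above about which type each build expects holds.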

While working on optimising someone’s code a few months ago I put together a couple of .m files in an attempt to address these issues. My intent was to improve the functionality of these files and eventually publish them but I never seemed to find the time. However, they have turned out to be a minor hit and I’ve sent them out to researcher after researcher with the caveat “These are a first draft, I need to tidy them up sometime” only to get the reply “Thanks for that, I no longer have any license problems and my code is executing more quickly.” They may be simple but it seems that they are useful.

So, until I find the time to add more functionality, here are my little wrappers that allow you to do non-linear least squares fitting using the NAG Toolbox for MATLAB. They are about as minimal as you can get but they work and have proven to be useful time and time again. They also solve all three of the NAG issues mentioned above.

To use them, just put them both somewhere on your MATLAB path. The syntax is identical to lsqcurvefit, but this first version of my wrapper doesn’t do anything more complicated than the following:

MATLAB:

[x,resnorm] = lsqcurvefit(@myfun,x0,xdata,ydata);

NAG with my wrapper:

[x,resnorm] = nag_lsqcurvefit(@myfun,x0,xdata,ydata);
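A wrapper like this is possible because a curve-fitting problem is a least-squares problem in disguise: lsqcurvefit minimises sum((myfun(x,xdata) - ydata).^2), which is exactly what lsqnonlin minimises when it is handed the residual function x -> myfun(x,xdata) - ydata. The sketch below shows that conversion using MATLAB’s own lsqnonlin, so it still needs the Optimisation Toolbox. It illustrates the idea rather than reproducing the wrapper files, where a NAG routine such as e04fy would take lsqnonlin’s place. The data are those of the example in the Performance section below.

```matlab
% Fit ydata ~ x(1)*exp(x(2)*xdata) by giving lsqnonlin a residual function.
xdata = [0.9 1.5 13.8 19.8 24.1 28.2 35.2 60.3 74.6 81.3];
ydata = [455.2 428.6 124.1 67.3 43.2 28.1 13.1 -0.4 -1.3 -1.5];
x0 = [100; -1];                              % starting guess

model = @(x, xd) x(1)*exp(x(2)*xd);          % same model as myfun.m
residual = @(x) model(x, xdata) - ydata;     % vector of fit errors

% lsqnonlin minimises sum(residual(x).^2); its second output is that sum,
% i.e. the same quantity lsqcurvefit reports as resnorm.
[x, resnorm] = lsqnonlin(residual, x0);
```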

I may add extra functionality in the future but it depends upon demand.

Performance

Let’s look at the example given on the MATLAB help page for lsqcurvefit and compare it to the NAG version. First create a file called myfun.m as follows:

    function F = myfun(x,xdata)
    F = x(1)*exp(x(2)*xdata);

Now create the data and call the MATLAB fitting function:

    % Assume you determined xdata and ydata experimentally
    xdata = [0.9 1.5 13.8 19.8 24.1 28.2 35.2 60.3 74.6 81.3];
    ydata = [455.2 428.6 124.1 67.3 43.2 28.1 13.1 -0.4 -1.3 -1.5];
    x0 = [100; -1]; % Starting guess
    [x,resnorm] = lsqcurvefit(@myfun,x0,xdata,ydata);

On my system I get the following timing for MATLAB (typical over around 10 runs):

    tic;[x,resnorm] = lsqcurvefit(@myfun,x0,xdata,ydata); toc
    Elapsed time is 0.075685 seconds.

with the following results:

    x =
       1.0e+02 *
       4.988308584891165
      -0.001012568612537
    >> resnorm
    resnorm =
       9.504886892389219

and for NAG, using my wrapper function, I get the following timing (typical over around 10 runs):

    tic;[x,resnorm] = nag_lsqcurvefit(@myfun,x0,xdata,ydata); toc
    Elapsed time is 0.008163 seconds.

So, for this example, NAG is around 9 times faster! The results agree with MATLAB to several decimal places:

    x =
       1.0e+02 *
       4.988308605396028
      -0.001012568632465
    >> resnorm
    resnorm =
       9.504886892366873
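Timings this short are noisy, which is why the figures above are typical values over around 10 runs. A minimal sketch of one way to collect such a figure is the helper below. The name typicalTime is hypothetical, used only for this sketch, and it reports the median rather than the mean so that an occasional slow run (such as a first call) does not dominate.

```matlab
function t = typicalTime(f, nRuns)
% TYPICALTIME  Median wall-clock time, in seconds, of nRuns calls to f.
%   f must be a function handle that takes no arguments, for example
%   t = typicalTime(@() lsqcurvefit(@myfun,x0,xdata,ydata), 10);
times = zeros(nRuns, 1);
for k = 1:nRuns
    tStart = tic;             % independent timer for this run
    f();                      % run the code being timed, discarding outputs
    times(k) = toc(tStart);   % elapsed seconds for this run
end
t = median(times);
end
```

Calling it once with the lsqcurvefit handle and once with the equivalent nag_lsqcurvefit handle gives two directly comparable numbers.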

In the real world I find that the relative timings vary enormously and have seen speed-ups that range from a factor of 14 down to none at all. Whenever I am optimising MATLAB code and see a call to lsqcurvefit, I always give the NAG version a quick try and am often impressed with the results.

My system specs, if you want to compare results: 64-bit Linux on an Intel Core 2 Quad Q9650 @ 3.00GHz running MATLAB 2010b and NAG Toolbox version MBL6A22DJL.


This is the third in an ongoing series of articles where I take a look at some of the mathematical applications available for iPad. Click here for part 1 and here for part 2. If you are the author of a mathematical iPad app that you’d like me to review then feel free to contact me. Also, if you use any mathematical iPad app regularly and think that it’s awesome, then contact me and let me know why.

Wolfram Alpha and the Wolfram Course Assistants

At the time of writing, Wolfram Research have several iPad apps available including the following:

- Wolfram Alpha

- Wolfram Music Theory Course Assistant

- Wolfram Calculus Course Assistant

- Wolfram Algebra Course Assistant

- Wolfram Astronomy Course Assistant

- Wolfram Multivariable Calculus Course Assistant

All of these apps are interfaces to Wolfram Alpha, the fantastic computational engine that I fell in love with upon its release. Sadly, the apps themselves do not live up to the quality that I have come to expect from Wolfram Research and, as one iTunes reviewer put it, they are very thin soup. In my opinion, the only one worth buying is the Wolfram Alpha app, and even that is questionable since you could just access Wolfram Alpha directly (and for free) from your iPad’s web browser. I’ll admit that the keyboard provided by the app is occasionally useful though.

The course assistants offer no additional content compared to the Wolfram Alpha app (or the website); they simply offer a menu driven way to generate search queries that are then sent to Wolfram Alpha. I would spend my money elsewhere if I were you.

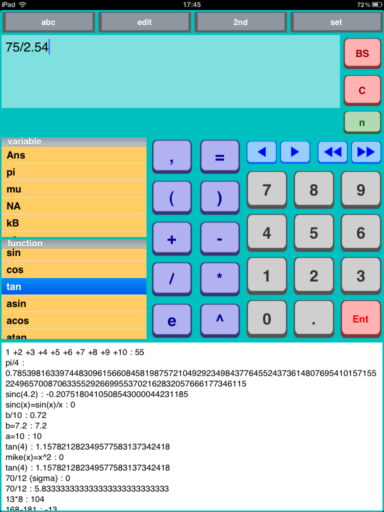

slcalc – ‘Kazuo Nakazato’

In my original Math on the iPad article I focused on all-singing, all-dancing computer algebra apps, but sometimes all you need is a good, old-fashioned calculator. There are hundreds available on the App Store and slcalc is my current favourite. slcalc has a long history for a mobile app, since its first outing was on the Linux-based Sharp Zaurus back in 2003. The current iPad version is great and includes a long calculation history, the ability to use variables, arbitrary precision calculation (up to 256 digits), big buttons and a reasonable set of functions. You can even program it (and here are some example programs). All this for $1.99 (there is also a more limited free version). My only minus point would be that the colour scheme is a bit... um... odd! Oh, and it can’t do complex arithmetic either, but these are only minor things that detract from an otherwise great app.


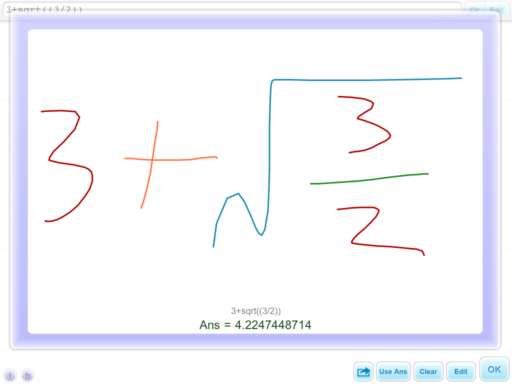

Handwriting Calculator – ‘Old Men’

The idea is brilliantly simple: you write the calculation that you want to perform directly onto your iPad’s screen and the iPad finds the result. No need to learn programming syntax or which button to press next, just write and calculate. Sadly, the reality isn’t quite so brilliant.

There are a limited number of functions (basic arithmetic, square root, factorial and power) and the handwriting recognition is a bit flaky, although I have to admit that my handwriting is probably more of a challenge than most. I also find myself wishing that I could use a stylus, since writing with my finger just doesn’t feel as precise. Furthermore, it turns out that I can punch numbers into a traditional calculator (such as slcalc above) MUCH faster than I can write them down.

In summary, it’s a nice idea and fun to play with for a couple of minutes, but it just isn’t very useful and not worth the $1.99 asking price.

I do the administration for the Carnival of Mathematics and am very happy to announce that the 77th edition has been published over at Jost a Mon. If you are unsure what a Math carnival is, then check out my introductory article or just read some past editions from either the Carnival of Math itself or its sister publication, Math Teachers at Play, which is run by Denise of Let’s Play Math fame.

The next Carnival of Math is scheduled to be hosted over at JimWilder.com and the submission form for articles is open now. If you’d like to host a future carnival of math on your blog or website then please contact me for further details.

A while ago I wrote an article comparing mobile phones with ancient supercomputers, and today I learned that Jack Dongarra has run his Linpack benchmark on the iPad 2 and discovered that it has enough processing power to rival the Cray-2, the most powerful supercomputer in the world back in 1985. According to Jack, the iPad 2 is so powerful that it would have stayed in the TOP500 list of the world’s most powerful supercomputers until 1994. That’s a lot of power!


Updated January 4th 2011

It is becoming increasingly common for programmers to make use of GPUs (Graphics Processing Units) to speed up their programs substantially. There are three major low-level programming libraries that allow you to do this in languages such as C, namely CUDA, OpenCL and Microsoft DirectCompute. Of these three, CUDA is the most developed, but it only works on NVIDIA graphics cards.

I am often asked if the major commercial math packages support GPU computing and I find myself writing the same summary email over and over again. So, here is a very brief breakdown of what is currently on offer. I plan to expand the information contained in this page over time so if you have any information about GPU computing in these packages then let me know.

MATLAB

Core MATLAB contains no support for GPU computing but several organizations (including The Mathworks themselves) have produced add-on toolboxes that add such support:

- Jacket – This is a product from a company called AccelerEyes and is possibly the most advanced and well developed GPU solution for MATLAB currently available. As of version 2.0 it supports both OpenCL and CUDA frameworks.

- The Mathworks’ Parallel Computing Toolbox (PCT) – If you want to do your MATLAB GPU computing the officially supported way then this is the product you need. As a bonus, it also allows you to make better use of the multicore processor that almost certainly resides in your machine. Like many of the offerings on this page, only the CUDA framework is supported, so you are out of luck if you don’t have an NVIDIA graphics card. Even if you do have an NVIDIA graphics card you still might be out of luck, since the PCT only supports cards with compute capability 1.3 or above (that is, only cards that support double precision).

- CULA is a set of GPU-accelerated linear algebra libraries utilizing the NVIDIA CUDA parallel computing architecture and it has a MATLAB interface.

- GPUmat – This product is completely free but is less developed than the commercial offerings above. Again, it is CUDA-only.

- OpenCL toolbox – The only OpenCL solution for MATLAB I could find. It is free but development seems to have stalled.
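To give a flavour of the PCT route, here is a minimal sketch of moving a matrix multiplication onto the GPU with the toolbox’s gpuArray and gather functions. It assumes that the Parallel Computing Toolbox is installed and that a supported NVIDIA card (compute capability 1.3 or above) is present; the 1000x1000 size is arbitrary.

```matlab
% Requires the Parallel Computing Toolbox and a supported NVIDIA GPU.
Ahost = rand(1000);          % ordinary double-precision matrix in host memory
A = gpuArray(Ahost);         % copy it to GPU memory
B = A * A;                   % the matrix multiplication runs on the GPU
C = gather(B);               % copy the result back into host memory
```

The explicit gather reflects the design: data stays on the card between GPU operations, so host/GPU transfers only happen where you ask for them.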

Mathematica

Mathematica 8 has support for both CUDA and OpenCL built in so no need for any add-ons. Furthermore, it supports both single and double precision GPUs so you can experiment with GPU computing on older, cheaper cards.

Maple

Maple has had some CUDA-only GPU support since version 14. On the face of it, the CUDA package only appears to contain one accelerated function, matrix-matrix multiplication, but when you load this function it accelerates many functions that use matrix-matrix multiplication internally. I’ve never found a definitive list of such functions though.

Mathcad

Mathcad 15 and Mathcad Prime have no support for GPU enhanced computing.

Welcome to the slightly delayed 4th edition of ‘A Month of Math Software’. If you have some math software news that you’d like including in a future edition then let me know. Previous articles can be found in the archive.

News

Wolfram Research have acquired a company called MathCore Engineering AB – http://www.mathcore.com/. The practical upshot of this is that we can expect future Mathematica versions to contain Simulink-like functionality. Wolfram’s press-release is at http://www.wolfram.com/news/mathcoreaquired.html and Stephen Wolfram spoke about this at http://blog.wolfram.com/2011/03/30/launching-a-new-era-in-large-scale-systems-modeling/.

Commercial releases

MATLAB 2011a was released by The Mathworks earlier this month. There have been a lot of changes around various toolboxes along with the usual performance enhancements and so on. I’ll be doing a write-up of it at some point but, for now, here are the release highlights.

Maplesoft’s Maple has seen a new major version. Maple 15 has got lots of new goodies. Check them out at http://www.maplesoft.com/products/maple/new_features/ which includes lots of examples of how Maple 15 is better than previous versions (and, in some cases, the competition).

The popular data analysis and plotting application, Origin, has seen an upgrade to version 8.5.1. The what’s new list is at http://www.originlab.com/index.aspx?go=Products/Origin&PID=1750 and this package is a firm favourite of users at my workplace, The University of Manchester. It’s just a shame that it is Windows-only. Ho hum!

HSL 2011 has been released; the first major release in 4 years. From the website: “HSL (formerly the Harwell Subroutine Library) is a collection of state-of-the-art packages for large-scale scientific computation written and developed by the Numerical Analysis Group at the STFC Rutherford Appleton Laboratory and other experts.” Although this is a commercial library, it is free for academic use.

I’ve been writing ‘A month of Math Software’ for four months now and you can always rely on the commercial computational algebra system, Magma, to supply us with some news. The v2.17-6 release change log is at http://magma.maths.usyd.edu.au/magma/releasenotes/2/17/7/

Open Source releases

One of the most powerful statistical programming languages in existence, R, has seen a new major release. Version 2.13 was released on April 13th. One of the biggest new developments is a new bytecode compiler for R which has been demonstrated and benchmarked over at Thinking Inside the Box. The huge list of changes is available in the NEWS file.

Maxima, the free open source computer algebra system for Windows, Mac and Linux, was upgraded to version 5.24 earlier this month. The changelog is available at http://maxima.cvs.sourceforge.net/viewvc/maxima/maxima/ChangeLog-5.24