Archive for July, 2011

Welcome to July’s Month of Math Software, my regular summary of news and releases in the world of software for the mathematically inclined. If you have a news item that you’d like to share in next month’s offering then feel free to contact me to discuss it. If you like what you see here then take a look at some of the previous editions.

Commercial Software Releases

- VSN International have released version 14 of their data analysis tool, Genstat for the biosciences. Interestingly, they also have a cut-down free version of Genstat for teaching use in schools and Universities.

- Jacket, the most feature-complete GPU toolbox for MATLAB, was updated to version 1.8 this month with a whole host of new features and functions. Jacket’s sister project, libJacket, a GPU library for C/C++/Fortran and Python, also saw an update to version 1.1.

- Version 12 of the general purpose statistical package, STATA, has been released. The release announcement, a large PDF file, tells us what’s new.

- Version 2.17-10 of the computer algebra package, MAGMA, has been released.

Freeware and Open Source Software Releases

- If you are working with statistics then R is probably the software package that you need to be using, no matter what operating system you prefer. Version 2.13.1 was released on July 8th and details of what’s new can be found at http://cran.r-project.org/bin/windows/base/NEWS.R-2.13.1.html

- Scilab, a high quality free alternative to MATLAB, has seen a minor upgrade. Version 5.3.3 corrects a couple of bugs.

- NumPy is a Python add-on that is almost essential if you plan to use Python for numerical computing. It adds support for vectors and matrices to Python along with facilities such as random number generation and FFTs. NumPy was upgraded to version 1.6.1, a bugfix release, on July 20th.

- PLASMA (Parallel Linear Algebra for Scalable Multi-core Architectures) has seen a bug-fix release with version 2.4.1.

Blog articles

- StrongInference has put up a great article about the upcoming IPython 0.11. IPython is an enhanced interactive Python shell that includes things such as matplotlib integration, interactive parallel computing and more. It’s great for the sort of interactive scientific calculations that people might otherwise use MATLAB and Mathematica for.

- The Mathworks show us how to calculate the Mandelbrot fractal on a GPU using the Parallel Computing Toolbox.

- Wolfram Research officially launch the CDF document format. Learn how to embed CDF documents into webpages.

- Over at Playing with Mathematica, Sol has gone crazy with Mathematica clocks.

This is part 1 of an ongoing series of articles about MATLAB programming for GPUs using the Parallel Computing Toolbox. The introduction and index to the series is at https://www.walkingrandomly.com/?p=3730.

Have you ever needed to take the sine of 100 million random numbers? Me neither, but such an operation gives us an excuse to look at the basic concepts of GPU computing with MATLAB and get an idea of the timings we can expect for simple elementwise calculations.

Taking the sine of 100 million numbers on the CPU

Let’s forget about GPUs for a second and look at how this would be done on the CPU using MATLAB. First, I create 100 million random numbers over a range from 0 to 10*pi and store them in the variable cpu_x:

cpu_x = rand(1,100000000)*10*pi;

Now I take the sine of all 100 million elements of cpu_x using a single command.

cpu_y = sin(cpu_x);

I have to confess that I find the above command very cool. Not only are we looping over a massive array using just a single line of code but MATLAB will also be performing the operation in parallel. So, if you have a multicore machine (and pretty much everyone does these days) then the above command will make good use of many of those cores. Furthermore, this kind of parallelisation is built into the core of MATLAB….no parallel computing toolbox necessary. As an aside, if you’d like to see a list of functions that automatically run in parallel on the CPU then check out my blog post on the issue.

So, how quickly does my 4 core laptop get through this 100 million element array? We can find out using the MATLAB functions tic and toc. I ran it three times on my laptop and got the following

>> tic;cpu_y = sin(cpu_x);toc

Elapsed time is 0.833626 seconds.

>> tic;cpu_y = sin(cpu_x);toc

Elapsed time is 0.899769 seconds.

>> tic;cpu_y = sin(cpu_x);toc

Elapsed time is 0.916969 seconds.

So the first thing you’ll notice is that the timings vary and I’m not going to go into the reasons why here. What I am going to say is that because of this variation it makes sense to time the calculation a number of times (20 say) and take an average. Let’s do that

sintimes=zeros(1,20);

for i=1:20;tic;cpu_y = sin(cpu_x);sintimes(i)=toc;end

average_time = sum(sintimes)/20

average_time =

0.8011

So, on average, it takes my quad core laptop just over 0.8 seconds to take the sine of 100 million elements using the CPU. A couple of points:

- I note that this time is smaller than any of the three test times I did before running the loop and I’m not really sure why. I’m guessing that it takes my CPU a short while to decide that it’s got a lot of work to do and ramp up to full speed but further insights are welcomed.

- While staring at the CPU monitor I noticed that the above calculation never used more than 50% of the available virtual cores. It’s using all 4 of my physical CPU cores but perhaps if it took advantage of hyperthreading I’d get even better performance? Changing OMP_NUM_THREADS to 8 before launching MATLAB did nothing to change this.
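One common way to sidestep the ramp-up effect described in the first point is to throw away a few untimed warm-up runs and to report the median and minimum alongside the mean. The following is only a minimal sketch of that idea: num_warmup, num_repeats and the smaller array size are illustrative assumptions, not values used for the timings above.

```matlab
% Sketch: discard warm-up runs, then summarise the remaining timings.
% num_elements, num_warmup and num_repeats are illustrative assumptions.
num_elements = 1e6;     % smaller than the 1e8 used above so it runs quickly
num_warmup   = 3;       % untimed runs that give the CPU time to ramp up
num_repeats  = 20;      % timed runs

cpu_x = rand(1,num_elements)*10*pi;

for k = 1:num_warmup
    cpu_y = sin(cpu_x);             % result discarded; only here to warm up
end

sintimes = zeros(1,num_repeats);
for k = 1:num_repeats
    tic; cpu_y = sin(cpu_x); sintimes(k) = toc;
end

mean_time   = mean(sintimes);
median_time = median(sintimes);     % less sensitive to a single slow run
min_time    = min(sintimes);        % fastest run observed
fprintf('mean %.4f s, median %.4f s, min %.4f s\n', mean_time, median_time, min_time);
```

If the mean sits well above the median, a few slow runs are skewing the average.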

Taking the sine of 100 million numbers on the GPU

Just like before, we start off by using the CPU to generate the 100 million random numbers (see footnote 1):

cpu_x = rand(1,100000000)*10*pi;

The first thing you need to know about GPUs is that they have their own memory that is completely separate from main memory. So, the GPU doesn’t know anything about the array created above. Before our GPU can get to work on our data we have to transfer it from main memory to GPU memory and we achieve this using the gpuArray command.

gpu_x = gpuArray(cpu_x); %this moves our data to the GPU

Once the GPU can see all our data we can apply the sine function to it very easily.

gpu_y = sin(gpu_x);

Finally, we transfer the results back to main memory.

cpu_y = gather(gpu_y);

If, like many of the GPU articles you see in the literature, you don’t want to include transfer times between GPU and host then you time the calculation like this:

tic; gpu_y = sin(gpu_x); toc

Just like the CPU version, I repeated this calculation several times and took an average. The result was 0.3008 seconds, giving a speedup of roughly 2.7 times compared to the 0.8011 second CPU average.

If, however, you include the time taken to transfer the input data to the GPU and the results back to the CPU then you need to time as follows

tic; gpu_x = gpuArray(cpu_x); gpu_y = sin(gpu_x); cpu_y = gather(gpu_y); toc

On my system this takes 1.0159 seconds on average, which is longer than it takes to simply do the whole thing on the CPU. So, for this particular calculation, transfer times between host and GPU swamp the benefits gained by all of those CUDA cores.
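To put a rough number on that transfer overhead, the two averages quoted above can simply be subtracted. This is back-of-envelope arithmetic only: it assumes that the whole difference between the two timings is copying to and from the GPU, and that each double precision number occupies 8 bytes. The variable names are illustrative.

```matlab
% Back-of-envelope estimate of transfer cost from the timings in the text.
t_compute_only  = 0.3008;   % seconds: sin on the GPU, no transfers
t_with_transfer = 1.0159;   % seconds: gpuArray + sin + gather

t_transfer = t_with_transfer - t_compute_only;   % time attributed to copying

num_elements  = 1e8;
bytes_moved   = 2 * num_elements * 8;            % doubles copied to the GPU and back
effective_GBs = bytes_moved / t_transfer / 1e9;  % assumes all of t_transfer is copying

fprintf('transfer time  : %.4f s (%.0f%% of the total)\n', ...
        t_transfer, 100*t_transfer/t_with_transfer);
fprintf('effective rate : %.2f GB/s\n', effective_GBs);
```

With these figures roughly 0.72 seconds, or about 70% of the total, goes on moving data rather than computing.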

Benchmark code

I took the ideas above and wrote a simple benchmark program called sine_test. The way you call it is as follows

[cpu,gpu_notransfer,gpu_withtransfer] = sine_test(numrepeats,num_elements)

For example, if you wanted to run the benchmarks 20 times on a 1 million element array and return the average times then you just do

>> [cpu,gpu_notransfer,gpu_withtransfer] = sine_test(20,1e6)

cpu =

0.0085

gpu_notransfer =

0.0022

gpu_withtransfer =

0.0116

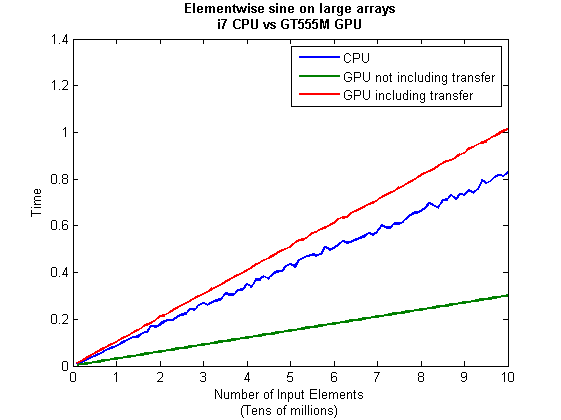

I then ran this on my laptop for array sizes ranging from 1 million to 100 million and used the results to plot the graph below.
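The source of sine_test is not reproduced in this article, so the following is only a guess at its shape: a minimal sketch, assuming it wraps the three timings described earlier in a loop and averages them. Only rand, sin, gpuArray, gather, tic and toc come from the text; the internal variable names and the loop structure are assumptions.

```matlab
function [cpu, gpu_notransfer, gpu_withtransfer] = sine_test(numrepeats, num_elements)
% SINE_TEST  Hypothetical reconstruction of the benchmark described above.
% Returns average times in seconds. Needs the Parallel Computing Toolbox
% and a supported GPU.

cpu_times     = zeros(1, numrepeats);
gpu_times     = zeros(1, numrepeats);
gpu_all_times = zeros(1, numrepeats);

for k = 1:numrepeats
    cpu_x = rand(1, num_elements)*10*pi;    % fresh input data for each repeat

    % CPU only
    tic; cpu_y = sin(cpu_x); cpu_times(k) = toc;

    % GPU, including transfers to and from the card
    tic;
    gpu_x = gpuArray(cpu_x);
    gpu_y = sin(gpu_x);
    cpu_y = gather(gpu_y);
    gpu_all_times(k) = toc;

    % GPU, calculation only (gpu_x is already on the card).
    % Caveat: newer MATLAB releases may queue GPU work and return early;
    % their documentation points to wait(gpuDevice) or gputimeit for this.
    tic; gpu_y = sin(gpu_x); gpu_times(k) = toc;
end

cpu              = mean(cpu_times);
gpu_notransfer   = mean(gpu_times);
gpu_withtransfer = mean(gpu_all_times);
end
```

Saved as sine_test.m, this would be called exactly as in the example above, for instance sine_test(20,1e6).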

But I wanna write a ‘GPUs are awesome’ paper

So far in this little story things are not looking so hot for the GPU and yet all of the ‘GPUs are awesome’ papers you’ve ever read seem to disagree with me entirely. What on earth is going on? Well, let’s take the advice given by csgillespie.wordpress.com and turn it on its head. How do we get awesome speedup figures from the above benchmarks to help us pump out a ‘GPUs are awesome’ paper?

0. Don’t consider transfer times between CPU and GPU.

We’ve already seen that this can ruin performance so let’s not do it shall we? As long as we explicitly say that we are not including transfer times then we are covered.

1. Use a singlethreaded CPU.

Many papers in the literature compare the GPU version with a single-threaded CPU version and yet I’ve been using all 4 cores of my processor. Silly me…let’s fix that by running MATLAB in single threaded mode by launching it with the command

matlab -singleCompThread

Now when I run the benchmark for 100 million elements I get the following times

>> [cpu,gpu_no,gpu_with] = sine_test(10,1e8)

cpu =

2.8875

gpu_no =

0.3016

gpu_with =

1.0205

Now we’re talking! I can now claim that my GPU version is over 9 times faster than the CPU version.

2. Use an old CPU.

My laptop has got one of those new-fangled sandy-bridge i7 processors…one of the best classes of CPU you can get for a laptop. If, however, I was doing these tests at work then I guess I’d be using a GPU mounted in my university Desktop machine. Obviously I would compare the GPU version of my program with the CPU in the Desktop….an Intel Core 2 Quad Q9650. Heck, it’s running at 3Ghz which is more Ghz than my laptop so to the casual observer (or a phb) it would look like I was using a more beefed up processor in order to make my comparison fairer.

So, I ran the CPU benchmark on that (in singleCompThread mode obviously) and got 4.009 seconds…noticeably slower than my laptop. Awesome…I am definitely going to use that figure!

I know what you’re thinking…Mike’s being a fool for the sake of it but csgillespie.wordpress.com puts it like this ‘Since a GPU has (usually) been bought specifically for the purpose of the article, the CPU can be a few years older.’ So, some of those ‘GPUs are awesome’ articles will be accidentally misleading us in exactly this manner.

3. Work in single precision.

Yeah I know that you like working with double precision arithmetic but that slows GPUs down. So, let’s switch to single precision. Just argue in your paper that single precision is OK for this particular calculation and we’ll be set. To change the benchmarking code all you need to do is change every instance of

rand(1,num_elems)*10*pi;

to

rand(1,num_elems,'single')*10*pi;

Since we are reputable researchers we will, of course, modify both the CPU and GPU versions to work in single precision. Timings are below

- Desktop at work (single thread, single precision): 3.49 seconds

- Laptop GPU (single precision, not including transfer): 0.122 seconds

OK, so switching to single precision made the CPU version a bit faster but it’s more than doubled GPU performance. We can now say that the GPU version is over 28 times faster than the CPU version. Now we have ourselves a bona fide ‘GPUs are awesome’ paper.
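As a quick sanity check on the single precision edit described in this step, here is a small sketch confirming that the 'single' flag survives both the scaling by 10*pi and the call to sin, and that the result takes half the memory. The variable names and the tiny array size are illustrative, not part of the benchmark.

```matlab
% Sketch: check that the single precision change propagates as expected.
num_elems = 1000;                           % small illustrative size

x_double = rand(1,num_elems)*10*pi;
x_single = rand(1,num_elems,'single')*10*pi;

y_double = sin(x_double);
y_single = sin(x_single);

disp(class(y_double));                      % double
disp(class(y_single));                      % single

info_d = whos('y_double');
info_s = whos('y_single');
fprintf('bytes: double %d, single %d\n', info_d.bytes, info_s.bytes);
```

Halving the bytes per element also halves the amount of data that has to cross between main memory and the GPU, although that does not show up in the no-transfer timings used in this step.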

4. Use the best GPU we can find

So far I have been comparing the CPU against the relatively lowly GPU in my laptop. Obviously, however, if I were to do this for real then I’d get a top of the range Tesla. It turns out that I know someone who has a Tesla C2050 and so we ran the single precision benchmark on that. The result was astonishing…0.0295 seconds for 100 million numbers not including transfer times. The double precision performance for the same calculation on the C2050 was 0.0524 seconds.

5. Write the abstract for our ‘GPUs are awesome’ paper

We took an Nvidia Tesla C2050 GPU and mounted it in a machine containing an Intel Quad Core CPU running at 3Ghz. We developed a program that performs element-wise trigonometry on arrays of up to 100 million single precision random numbers using both the CPU and the GPU. The GPU version of our code ran up to 118 times faster than the CPU version. GPUs are awesome!
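The headline figures in the steps above are nothing more than ratios of timings already quoted in this article. A short sketch of the arithmetic, using only those timings (the variable names are illustrative):

```matlab
% Ratios behind the 'GPUs are awesome' claims, from timings quoted in the text.
cpu_laptop_1thread_double  = 2.8875;  % step 1: laptop CPU, single thread
gpu_laptop_double          = 0.3016;  % step 1: laptop GPU, no transfers
cpu_desktop_1thread_single = 3.49;    % step 3: Q9650, single thread, single precision
gpu_laptop_single          = 0.122;   % step 3: laptop GPU, single precision
gpu_tesla_single           = 0.0295;  % step 4: Tesla C2050, single precision

speedup_step1 = cpu_laptop_1thread_double  / gpu_laptop_double;   % about 9.6
speedup_step3 = cpu_desktop_1thread_single / gpu_laptop_single;   % about 28.6
speedup_step4 = cpu_desktop_1thread_single / gpu_tesla_single;    % about 118.3

fprintf('step 1: %.1fx\nstep 3: %.1fx\nstep 4: %.1fx\n', ...
        speedup_step1, speedup_step3, speedup_step4);
```

For comparison, the multi-threaded, double precision laptop timings quoted in this article are 0.801 seconds on the CPU against 1.0205 seconds on the GPU including transfers.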

Results from different CPUs and GPUs. Double precision, multi-threaded

I ran the sine_test benchmark on several different systems for 100 million elements. The CPU was set to be multi-threaded and double precision was used throughout.

sine_test(10,1e8)

GPUs

- Tesla C2050, Linux, 2011a – 0.7487 seconds including transfers, 0.0524 seconds excluding transfers.

- GT 555M – 144 CUDA Cores, 3Gb RAM, Windows 7, 2011a (My laptop’s GPU) – 1.0205 seconds including transfers, 0.3016 seconds excluding transfers.

CPUs

- Intel Core i7-880 @3.07Ghz, Linux, 2011a – 0.659 seconds

- Intel Core i7-2630QM, Windows 7, 2011a (My laptop’s CPU) – 0.801 seconds

- Intel Core 2 Quad Q9650 @ 3.00GHz, Linux – 0.958 seconds

Conclusions

- MATLAB’s new GPU functions are very easy to use! No need to learn low-level CUDA programming.

- It’s very easy to massage CPU vs GPU numbers to look impressive. Read those ‘GPUs are awesome’ papers with care!

- In real life you have to consider data transfer times between GPU and CPU since these can dominate overall wall clock time with simple calculations such as those considered here. The more work you can do on the GPU, the better.

- My laptop’s GPU is nowhere near as good as I would have liked it to be. It is almost 6 times slower than a Tesla C2050 (excluding data transfer) for elementwise double precision calculations. Data transfer times seem to be about the same though.

Next time

In the next article in the series I’ll look at an element-wise calculation that really is worth doing on the GPU – even using the wimpy GPU in my laptop – and introduce the MATLAB function arrayfun.

Footnote

1 – MATLAB 2011a can’t create random numbers directly on the GPU. I have no doubt that we’ll be able to do this in future versions of MATLAB which will change the nature of this particular calculation somewhat. Then it will make sense to include the random number generation in the overall benchmark; transfer times to the GPU will be non-existent. In general, however, we’ll still come across plenty of situations where we’ll have a huge array in main memory that needs to be transferred to the GPU for further processing so what we learn here will not be wasted.

Hardware / Software used for the majority of this article

- Laptop model: Dell XPS L702X

- CPU: Intel Core i7-2630QM @2Ghz software overclockable to 2.9Ghz. 4 physical cores but total 8 virtual cores due to Hyperthreading.

- GPU: GeForce GT 555M with 144 CUDA Cores. Graphics clock: 590Mhz. Processor clock: 1180Mhz. 3072 Mb DDR3 Memory

- RAM: 8 Gb

- OS: Windows 7 Home Premium 64 bit. I’m not using Linux because of the lack of official support for Optimus.

- MATLAB: 2011a with the parallel computing toolbox

Other GPU articles at Walking Randomly

- GPU Support in Mathematica, Maple, MATLAB and Maple Prime – See the various options available

- Insert new laptop to continue – My first attempt at using the GPU functionality in MATLAB

- NVIDIA lets down Linux laptop users – and how an open source project saves the day

Thanks to various people at The Mathworks for some useful discussions, advice and tutorials while creating this series of articles.

These days it seems that you can’t talk about scientific computing for more than 5 minutes without someone bringing up the topic of Graphics Processing Units (GPUs). Originally designed to make computer games look pretty, GPUs are massively parallel processors that promise to revolutionise the way we compute.

A brief glance at the specification of a typical laptop suggests why GPUs are the new hotness in numerical computing. Take my new one for instance, a Dell XPS L702X, which comes with a Quad-Core Intel i7 Sandybridge processor running at up to 2.9Ghz and an NVidia GT 555M with a whopping 144 CUDA cores. If you went back in time a few years and told a younger version of me that I’d soon own a 148 core laptop then young Mike would be stunned. He’d also be wondering ‘What’s the catch?’

Of course the main catch is that not all processor cores are created equal. Those 144 cores in my GPU are, individually, rather wimpy when compared to the ones in the Intel CPU. It’s the sheer quantity of them that makes the difference. The question at the forefront of my mind when I received my shiny new laptop was ‘Just how much of a difference?’

Now I’ve seen lots of articles that compare CPUs with GPUs and the GPUs always win…..by a lot! Dig down into the meat of these articles, however, and it turns out that things are not as simple as they seem. Roughly speaking, the abstract of some of them could be summed up as ‘We took a serial algorithm written by a chimpanzee for an old, outdated CPU and spent 6 months parallelising and fine tuning it for a top of the line GPU. Our GPU version is up to 150 times faster!’

Well it would be wouldn’t it?! In other news, Lewis Hamilton can drive his F1 supercar around Silverstone faster than my dad can in his clapped out 12 year old van! These articles are so prevalent that csgillespie.wordpress.com recently published an excellent article that summarised everything you should consider when evaluating them. What you do is take the claimed speed-up, apply a set of common sense questions and thus determine a realistic speedup. That factor of 150 can end up more like a factor of 8 once you think about it the right way.

That’s not to say that GPUs aren’t powerful or useful…it’s just that maybe they’ve been hyped up a bit too much!

So anyway, back to my laptop. It doesn’t have a top of the range GPU custom built for scientific computing, instead it has what Notebookcheck.net refers to as a fast middle class graphics card for laptops. It’s got all of the required bits though….144 cores and CUDA compute level 2.1 so surely it can whip the built in CPU even if it’s just by a little bit?

I decided to find out with a few randomly chosen tests. I wasn’t aiming for the kind of rigor that would lead to a peer reviewed journal but I did want to follow some basic rules at least

- I will only choose algorithms that have been optimised and parallelised for both the CPU and the GPU.

- I will release the source code of the tests so that they can be criticised and repeated by others.

- I’ll do the whole thing in MATLAB using the new GPU functionality in the parallel computing toolbox. So, to repeat my calculations all you need to do is copy and paste some code. Using MATLAB also ensures that I’m using good quality code for both CPU and GPU.

The articles

This is the introduction to a set of articles about GPU computing on MATLAB using the parallel computing toolbox. Links to the rest of them are below and more will be added in the future.

- Elementwise operations on the GPU #1 – Basic commands using the PCT and how to write a ‘GPUs are awesome’ paper; no matter what results you get!

- Elementwise operations on the GPU #2 – A slightly more involved example showing a useful speed-up compared to the CPU. An introduction to MATLAB’s arrayfun

- Optimising a correlated asset calculation on MATLAB #1: Vectorisation on the CPU – A detailed look at a port from CPU MATLAB code to GPU MATLAB code.

- Optimising a correlated asset calculation on MATLAB #2: Using the GPU via the PCT – A detailed look at a port from CPU MATLAB code to GPU MATLAB code.

- Optimising a correlated asset calculation on MATLAB #3: Using the GPU via Jacket – A detailed look at a port from CPU MATLAB code to GPU MATLAB code.

External links of interest to MATLABers with an interest in GPUs

- The Parallel Computing Toolbox (PCT) – The MathWorks’ MATLAB add-on that gives you CUDA GPU support.

- Mike Giles’ MATLAB GPU Blog – from the University of Oxford

- Accelereyes – Developers of ‘Jacket’, an alternative to the parallel computing toolbox.

- A Mandelbrot Set on the GPU – Using the parallel computing toolbox to make pretty pictures…FAST!

- GP-you.org – A free CUDA-based GPU toolbox for MATLAB

- Matlab, CUDA and Me – Stu Blair gives various examples of calling CUDA kernels directly from MATLAB

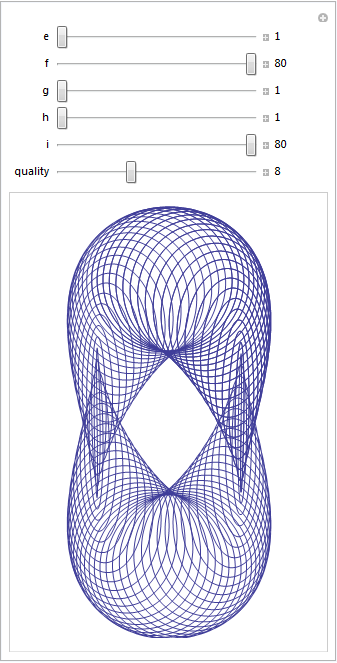

Over at Playing with Mathematica, Sol Lederman has been looking at pretty parametric and polar plots. One of them really stood out for me, the one that Sol called ‘Slinky Thing’ which could be generated with the following Mathematica command.

ParametricPlot[{Cos[t] - Cos[80 t] Sin[t], 2 Sin[t] - Sin[80 t]}, {t, 0, 8}]

Out of curiosity I parametrised some of the terms and wrapped the whole thing in a Manipulate to see what I could see. I added 5 controllable parameters by turning Sol’s equations into

{Cos[e t] - Cos[f t] Sin[g t], 2 Sin[h t] - Sin[i t]}, {t, 0, 8}

Each parameter has its own slider (below). If you have Mathematica 8, or the free cdf player, installed then the image below will turn into an interactive applet which you can use to explore the parameter space of these equations.

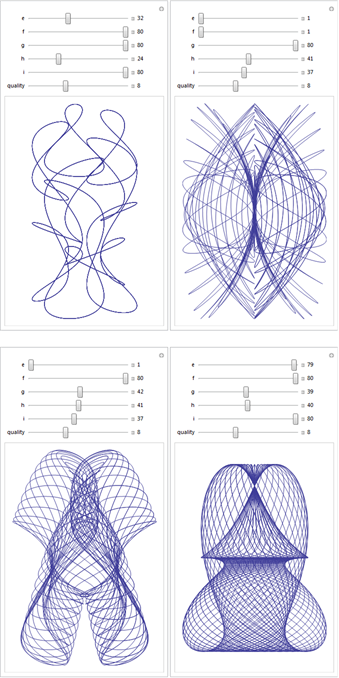

Here are four of my favourites. If you come up with one that you particularly like then feel free to let me know what the parameters are in the comments.

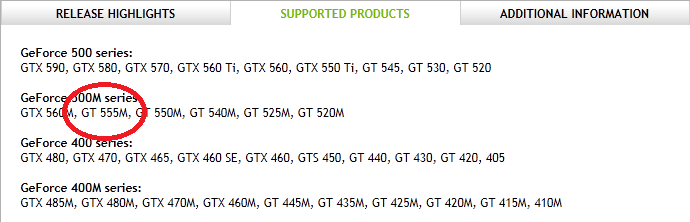

I recently maxed out my credit card in order to treat myself to a shiny new Dell XPS L702X laptop that comes complete with Intel i7 sandy bridge processor and Nvidia GeForce GT 555M. The NVidia graphics card was one of the biggest selling points for me because I wanted to do some GPU work at home and on the train using both CUDA and OpenCL. I get asked about these technologies a lot by researchers at The University of Manchester and I wanted to beef up my experience levels.

I wanted this machine to be dual boot Windows 7 and Linux so, before I shelled out my hard-earned cash, I thought I would check that Nvidia’s Linux driver supported the GT 555M. A quick look at their official driver page confirmed that it did so I handed over the credit card. After all, if Nvidia themselves say that it is supported then you’d expect it to be supported right?

Wrong! Here’s my story.

I installed Ubuntu 11.04 from DVD without a hitch and updated all packages to the very latest versions. I then hopped over to NVidia’s website, got the driver (version 275.09.07) and installed it. I’ve gone through this process dozens of times on Desktop machines at work and wasn’t expecting any problems but boy did I get problems. After installing NVidia’s driver, the Dell simply would not boot into Linux. Not only that but it never seemed to fail in exactly the same place twice…the boot process would start just fine and then it would crash…seemingly at random. So, off to the forums I went where I quickly discovered that my system was not as supported as I originally thought.

You see, my laptop has two graphics systems on it: A relatively low-powered Intel one and the NVidia one. It also comes with some cool sounding technology called Optimus that helps save battery power on systems like mine. Rather than explain the details of Optimus, I’m just going to refer you to both Nvidia’s web page about it along with the Wikipedia page.

Here’s the kicker…Nvidia’s Linux driver does not support Optimus, even though Optimus is Nvidia’s own technology. They even say that they don’t support it in the Additional Information tab. Furthermore, they have no plans to support it. Sadly, I didn’t even realise that my new laptop was an Optimus laptop until I tried to get the Nvidia drivers on it.

If only I had thought to myself ‘Well, Nvidia may say that they support the 555M but do they really mean it?’ If I had mistrusted the information given on the supported products page then perhaps I would have read the Additional Information tab and trawled the forums. I chose to trust Nvidia and assume that when a product was listed under ‘supported products’ I didn’t need to worry. Well, you live and learn I guess.

Project Bumblebee

One of the fantastic things about the Linux community is that even if you are let down by your hardware vendor then someone, somewhere may well come to your rescue. For Nvidia Optimus, that someone is Martin Juhl. Martin’s project, Bumblebee, brings Optimus support to Linux which is useful since it seems that Nvidia can’t be bothered!

Installation for Ubuntu users is easy. All you need to do is open up a terminal, type the following and follow the instructions to download and install both the Nvidia drivers along with bumblebee.

sudo apt-add-repository ppa:mj-casalogic/bumblebee

sudo apt-get update && sudo apt-get install bumblebee

To run an application, glxgears for example, you just type the following at the command line

optirun glxgears

Sadly for me this didn’t work. All I got was the following

* Starting Bumblebee X server bumblebee

_PS0 Enabling nVidia Card Succeded. [ OK ]

* Stopping Bumblebee X server bumblebee

_DSM Disabling nVidia Card Succeded.

_PS3 Disabling nVidia Card Succeded.

Nothing else happened. I’d file a bug report but it seems that someone with a very similar configuration to me has already done so and work is being done on it as we speak. Plenty of other people have reported success with bumblebee though and I am confident that I will be up and running soon. As soon as I am up and running I’ll owe the developer of bumblebee a beer!

Update 11th July: The bumblebee bug mentioned above has been fixed. I can now run apps via optirun. Not done much more than run glxgears though so far.

Welcome to the 6th edition of A Month Of Math Software where I take a look at all the shiny new things that are available for a geek like me to play with. Previous editions are available here.

Commercial Mathematics Software

- Mark 23 of the venerable NAG Fortran Library has been released. The new version contains over 100 new routines and there is some very nice stuff to be found. They’ve beefed up their global optimization chapter for example by improving their multi-level coordinate search routine to allow equality bound constraints and also by adding a particle swarm optimizer.

- More news from NAG – They’ve released their Optimization routines as an R module! Note that you need a licensed version of the NAG Fortran Library installed to use them.

Free Mathematics Software

- Octave – This open source alternative to MATLAB has seen a very minor update to version 3.4.2 which fixes some minor installation problems.

- Pari – Version 2.5 of this computer algebra system which specialises in number theory has been released. Get the changelog here.

- PLASMA – PLASMA stands for Parallel Linear Algebra for Scalable Multi-core Architectures. The version 2.4 changelog is available at http://icl.cs.utk.edu/plasma/news/news.html?id=270

Mathematical Software on GPUs

Unless you’ve been hiding under a scientific computing rock for the last couple of years you’ll know that GPUs (Graphics Processing Units) are how everyone wants to do their computing these days. For the right kind of problem, GPUs can be faster than standard CPUs by an order of magnitude or more. Access to such cheap computational power fundamentally changes the type of mathematical and scientific problems that we can realistically tackle, which is nice! Unfortunately, however, programming GPUs is not particularly easy so it’s a good job that various research groups and software companies have stepped in to do some of the heavy lifting for us. Last month I mentioned a new release of NAG’s GPU offering and April saw a release candidate of v1.0 of MAGMA (open source multicore+GPU linear algebra). Here’s what happened in June:

- LibJacket v1.0 released – This is a new product from Accelereyes, the company who have been doing GPU acceleration on MATLAB for a few years now. With LibJacket they’ve gone a step further and released a C/C++ library. See their wiki for a list of supported functions.

Compilers Compilers Compilers

OK, so this isn’t mathematical software news really but good compilers are essential for fast mathematical code. There have been a couple of compiler-based news items that have got me excited this month.

- Pathscale EKOPath 4 compilers go open source – If you have a Linux system and are running some open-source mathematical software then there is a good chance that your software was compiled with the standard open source C-compiler, gcc. Now, gcc is a fantastic compiler but, according to many benchmarks, Pathscale’s compilers produce faster executables than gcc for many computationally intensive operations. The practical upshot for most of us is that some of our favourite software packages might be getting a free speed boost in the near future. There’s a huge discussion thread about this over at phoronix.com.

- Intel have released a new open source compiler called ispc. According to Intel’s site “ispc is a new compiler for “single program, multiple data” (SPMD) programs.” The example program is a simple Mandelbrot renderer. Make those SIMD lanes in your CPU work harder for you!

Mathematical software elsewhere on the web

- Why you shouldn’t use Excel 2007 for doing statistics – My answer would have been ‘because other statisticians will point and laugh at you if you do’ but it turns out that there are good mathematical reasons to not use it as well. This is an old article but worthy of further attention in my opinion.

- In defense of inefficient scientific code – An article I wrote last month about why code that runs inefficiently can be OK. It proved to be something of a hit on twitter so I thought I’d add it here in case you’re interested.

- Everything You Ever Wanted to Know About Floating Point but Were Afraid to Ask

- Stand-alone code for numerical computing – because sometimes you don’t want to install a massive numerical library just to get some Gamma distributed random variates (among other things).

- Bouncing Balls

- Storing banded matrices for speed

The Pendulum Waves video is awesome and the system has been simulated in Mathematica (twice), Maple and probably every other programming language by now. During a bout of insomnia I used the Mathematica code written by Matt Henderson as inspiration and made a simple MATLAB version. Here’s the video

Here’s the code.

freqs = 5:15;

num = numel(freqs);

lengths = 1./sqrt(freqs);

piover6 = pi/6;

figure

axis([-0.3 0.3 -0.5 0]);

axis off;

org=zeros(size(freqs));

xpos=zeros(size(freqs));

ypos=zeros(size(freqs));

pendula = line([org;org],[org;org],'LineWidth',1,'Marker','.','MarkerSize',25 ...

,'Color',[0 0 0],'visible','off' );

% Open the avi file

vidObj = VideoWriter('pendula_wave.avi');

open(vidObj);

for t=0:0.001:1

omegas = 2*pi*freqs*t;

xpos = sin(piover6*cos(omegas)).*lengths;

ypos = -cos(piover6*cos(omegas)).*lengths;

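% Move each pendulum to its new position

for i=1:num

set(pendula(i),'visible','on','XData',[0 xpos(i)],'YData',[0 ypos(i)]);

end

% Redraw once per time step rather than once per pendulum

drawnow

% Capture the current axes and append them to the video as a single frame

currFrame = getframe;

writeVideo(vidObj,currFrame);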

end

% Close the file.

close(vidObj);