Python/NAG Part 5 – Callback functions with communication.

This is part 5 of a series of articles devoted to demonstrating how to call the Numerical Algorithms Group (NAG) C library from Python. Click here for the index to this series.

The Optimisation chapter of the NAG C library is one of the most popular chapters in my experience. It is also one of the most complicated from a programming point of view but if you have been working through this series from the beginning then you already know all of the Python techniques you need. From this point on it’s just a matter of increased complexity.

As always, however, I like to build the complexity up slowly so let’s take a look at possibly the simplest NAG optimisation routine – e04abc. This routine minimizes a function of one variable, using function values only. In other words – you don’t need to provide it with derivative information which simplifies the programming at the cost of performance.

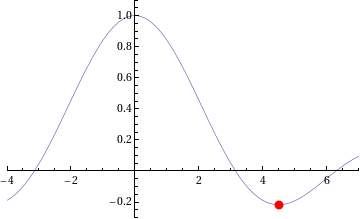

The example Python code I am going to give will solve a very simple local optimisation problem – The minimisation of the function sin(x)/x in the range [3.5,5.0] or, put another way, what are the x and y values of the centre of the red dot in the picture below.

The NAG/Python code to do this is given below (or you can download it here).

#!/usr/bin/env python #Example 4 using NAG C library and ctypes - e04abc #Mike Croucher - April 2008 #Concepts : Communication callback functions that use the C prototype void funct(double, double *,Nag_comm *)

from ctypes import *

from math import *

libnag = cdll.LoadLibrary("/opt/NAG/cllux08dgl/lib/libnagc_nag.so.8")

e04abc = libnag.e04abc

e04abc.restype=None

class Nag_Comm(Structure):

_fields_ = [("flag", c_int),

("first", c_int),

("last", c_int),

("nf",c_int),

("it_prt",c_int),

("it_maj_prt",c_int),

("sol_sqp_prt",c_int),

("sol_prt",c_int),

("rootnode_sol_prt",c_int),

("node_prt",c_int),

("rootnode_prt",c_int),

("g_prt",c_int),

("new_lm",c_int),

("needf",c_int),

("p",c_void_p),

("iuser",POINTER(c_int) ),

("user",POINTER(c_double) ),

("nag_w",POINTER(c_double) ),

("nag_iw",POINTER(c_int) ),

("nag_p",c_void_p),

("nag_print_w",POINTER(c_double) ),

("nag_print_iw",POINTER(c_int) ),

("nrealloc",c_int)

]

#define python objective function def py_obj_func(x,ans,comm): ans[0] = sin(x) / x return

#create the type for the c callback function C_FUNC = CFUNCTYPE(None,c_double,POINTER(c_double),POINTER(Nag_Comm))

#now create the c callback function c_func = C_FUNC(py_obj_func)

#Set up the problem parameters comm = Nag_Comm() #e1 and e2 control the solution accuracy. Setting them to 0 chooses the default values. #See the documentation for e04abc for more details e1 = c_double(0.0) e2 = c_double(0.0) #a and b define our starting interval a = c_double(3.5) b = c_double(5.0) #xt will contain the x value of the minimum xt=c_double(0.0) #f will contain the function value at the minimum f=c_double(0.0) #max_fun is the maximum number of function evaluations we will allow max_fun=c_int(100) #Yet again I cheat for fail parameter. fail=c_long(0)

e04abc(c_func,e1,e2,byref(a),byref(b),max_fun,byref(xt),byref(f),byref(comm),fail)

print "The minimum is in the interval [%s,%s]" %(a.value,b.value) print "The estimated result = %s" % xt.value print "The function value at the minimum is %s " % f.value print "%s evaluations were required" % comm.nf

If you run this code then you should get the following output

The minimum is in the interval [4.4934093959,4.49340951173] The estimated result = 4.49340945381 The function value at the minimum is -0.217233628211 10 evaluations were required

IFAIL oddness

My original version of this code used the trick of setting the NAG IFAIL parameter to c_int(0) to save me from worrying about the NagError structure (this is first explained in part 1) but when this example was tested it didn’t work on 64bit machines although it worked just fine on 32bit machines. Changing it to c_long(0) (as I have done in this example) made it work on both 32 and 64bit machines.

What I find odd is that in all my previous examples, c_int(0) worked just fine on both architectures. Eventually I will cover how to use the IFAIL parameter ‘properly’ but for now it is sufficient to be aware of this issue.

Scary Structures

Although this is the longest piece of code we have seen so far in this series, it contains no new Python/ctypes techniques at all. The only major difficulty I faced when writing it for the first time was making sure that I got the specification of the Nag_Comm structure correct by carefully working though the C specification of it in the nag_types.h file (included with the NAG C library installation) and transposing it to Python.

One of the biggest hurdles you will face when working with the NAG C library and Python is getting these structure definitions correct and some of them are truly HUGE. If you can get away with it then you are advised to copy and paste someone else’s definitions rather than create your own from scratch. In a later part of this series I will be releasing a Python module called PyNAG which will predefine some of the more common structures for you.

Callback functions with Communication

The objective function (that is, the function we want to minimise) is implemented as a callback function but it is a bit more complicated than the one we saw in part 3. This NAG routine expects the C prototype of your objective function to look like this:

void funct(double xc, double *fc, Nag_Comm *comm)

- xc is an input argument and is the point at which the value of f(x) is required.

- fc is an output argument and is the value of the function f at the current point x. Note that it is a pointer to double.

- Is a pointer to a structure of type Nag_Comm. Your function may or may not use this directly but the NAG routine almost certainly will. It is through this structure that the NAG routine can communicate various things to and from your function as and when is necessary.

so our C-types function prototype looks like this

C_FUNC = CFUNCTYPE(None,c_double,POINTER(c_double),POINTER(Nag_Comm))

whereas the python function itself is

#define python objective function def py_obj_func(x,ans,comm): ans[0] = sin(x) / x return

Note that we need to do

ans[0] = sin(x) / x

rather than simply

ans = sin(x) / x

since ans is a pointer to double rather than just a double.

In this particular example I have only used the simplest feature provided by the Nag_Comm structure and that is to ask it how many times the objective function was called.

print "%s evaluations were required" % comm.nf

That’s it for this part of the series. Next time I’ll be looking at a more advanced optimisation routine. Feel free to post any comments, questions or suggestions.

Let’s compare it with OpenOpt code (http://openopt.org, BTW license BSD allows using it in open- and closed-code as well):

from numpy import *

from openopt import *

p = NLP(lambda x: sin(x)/x, 1, lb = 3.5, ub=5)

r = p.solve(‘goldenSection’)

print ‘optimal point:’, r.xf, ‘optimal value:’, r.ff

—————————————————–

solver: goldenSection problem: unnamed goal: minimum

iter objFunVal

0 8.415e-001

10 -2.172e-001

20 -2.172e-001

30 -2.172e-001

31 -2.172e-001

istop: 3 (|| X[k] – X[k-1] || < xtol)

Solver: Time Elapsed = 0.05 CPU Time Elapsed = 0.0383300112165

objFunValue: -0.21723363 (feasible, max constraint = 0)

optimal point: [ 4.4934095] optimal value: -0.217233628211

#####

Setting of non-default parameters seems to be easier:parameters:

p.maxIter = 100, p.maxFunEvals = 1000, p.maxTime = 1, p.maxCPUTime = 1

p.xtol = 1e-7, p.ftol = 1e-7

p.plot = 1, p.iprint = 5

or just

p = NLP(…, iprint = 5, plot = True, maxIter = 100, etc )

Hi

Thanks for that – OpenOpt has been mentioned by commentators on here a few times now and looks like a nice piece of software. I fully intend on looking into it in more detail in the near future (maybe you could help with that if you like – feel free to get in touch by email).

It doesn’t surprise me, however, that solving a problem using a native Python library uses fewer lines of code than something that interfaces to a C library. With a little work I guess that one could come up with a Pythonic interface to the NAG library that was as easy to use as OpenOpt if that is what you wanted to do (although the license conditions wouldn’t be so liberal of course since NAG is commercial).

Where the NAG solution ‘wins’ in my opinion is when you have finished prototyping an algorithm and you want to move your code to some ‘big iron’ – a supercomputer in other words. Rightly or wrongly the operators of many large compute clusters may well ask you to recode your solution in something such as C or Fortran since they are more efficient.

If you have prototyped with NAG/Fortran or NAG/C then you will be using EXACTLY the same solvers and numerical routines on your big iron, low level code as you were on your laptop with Python.

Cheers,

Mike

OpenOpt has already connections for lots of C/C++/Fortran-written solvers. The problem of optimization Python funcs on computer with several processors is first of all like that: you can’t execute several Python functions (objective functions or non-linear constraints) in parallel, when this is possible from C/Fortran (eg solver PSwarm can use multiCPU when objective function is coded on C, but for PSwarm Python API it’s not valid). You could use specialized Python modules (like pyprocessing), but their capabilities are very limited, at least for now, and for complex functions other methods should be used, while they are efficient for very, very costly functions only.

On the other hand, it doesn’t matter when matrix problems are considered, like LP, QP, MILP, SOCP, SDP etc.

But as for NAG optimization routines, I just can’t remember anything essential, i.e. well-known and comparable to CPLEX, IPOPT, mosek, KNITRO etc.

If you wish, I could create a subdirectory in openopt/solvers for openopt connections to your Python wrappers for NAG, and give you subversion write access – so you could do whatever you want there.

But still I can hardly believe you will earn any essential money with that.

mosek had tried – and, of course, he failed, as it was expected (http://forum.openopt.org/viewtopic.php?pid=50). I don’t know any essential commercial software (neither optimization nor anything else) that earned some money on Python wrappers, mb except of CPLEX (but I’m not sure here as well), because free LP/MILP solvers are too far from CPLEX yet. Seems like Python for science progammers tend to avoid using commercial software as long as possible, and ususally they succeed in it.

Let me also note – there are some efforts of involving multiCPU with Python like this:

http://forum.openopt.org/viewtopic.php?id=86

Hi Dmitrey

Thanks for the kind offer but unfortunately I have to decline – I have already spent a lot more time on this particular project than I originally intended.

As for earning money with this stuff…I haven’t earned any so far and I don’t mind if I never do. You’ll notice that I am not selling anything here and I don’t work for NAG ;)

I started working out how to interface NAG with Python because a postgraduate at my University asked if it was possible. Turns out it is :) These articles are just a write-up of my investigations and if they turn out to be useful to others then that’s fantastic but if not, well, I won’t lose too much sleep over it.

As long as the codes I write about are correct then I am happy. Of course if they prove to be useful to anyone other than myself and the couple of people who originally asked for them then that will be a bonus.

As for the usefulness/quality of NAG compared to the other libraries you mention, I simply do not know enough about optimisation to comment but I would welcome any information concerning areas that NAG is perceived to be lacking.

Part of my job at Manchester University is to look after site licenses for engineering and scientific software that the computer centre pays for so I am essentially a customer of NAG (and Wolfram and Mathworks and PTC etc etc). I want the best I can get for the University’s money so if I learned that NAG could be better I would pass it on to them and ask WHY they are not better.

If any of the NAG users I serve complained about a lack of functionality then I would (and have done so in the past) nag NAG as much as possible to get the extra functionality included in their next release. Trust me, I am not an easy customer to please. However, I find that NAG are very responsive and several new functions in their latest Fortran release are (partially) there thanks to requests from us. I also like dealing with them because of the amount of work they have done for the open-source community (they have contributed heavily to LAPACK for example).

Anyway, I have written enough for one comment I think. Thanks for the feedback – I’ll be watching your project with interest :)

Best Wishes,

Mike

Ok, I thought you working for NAG, I have been mesleaded by the chapter “I have been supporting users of the NAG library …” from http://www.walkingrandomly.com/?p=830 . As for “I find that NAG are very responsive” – I guess it’s up to almost any commercial software, who decided new funcs / other features can help them earn more money. In any way, I respect NAG for their contributions to LAPACK.

Nope, I work for a University but working for NAG would be fine too! It’s not evil to make a living from selling software just as it’s not evil to make money from plumbing.

Of course they add new functions that help them earn money….they are a business selling software. They are also one of the best kind – they are a not for profit company! So, all of the money they make goes into paying developers and support staff to produce a better product. As a side effect, the community benefits from the contributions they make (Axiom used to belong to NAG for example and we have already discussed their contributions to LAPACK). Everybody wins!

In my opinion (and that of many,many people at my University) they offer a service that is WORTH paying for. So we pay for it and we will continue to do so.